With all the criticism and chaos surrounding AI moderation blunders and content crackdowns last year, Meta made a pretty big decision. It pulled the plug on its third-party fact-checking program and went all in on a Community Notes model instead. As Mark Zuckerberg put it, the platform had "reached a point where it's just too many mistakes and too much censorship".

That's a pretty radical bet on the idea that users are wiser than corporate suits, and to be honest, we were curious to see how it would play out.

Before we dive in, though, a heads-up for those who aren't following our Telegram channel.

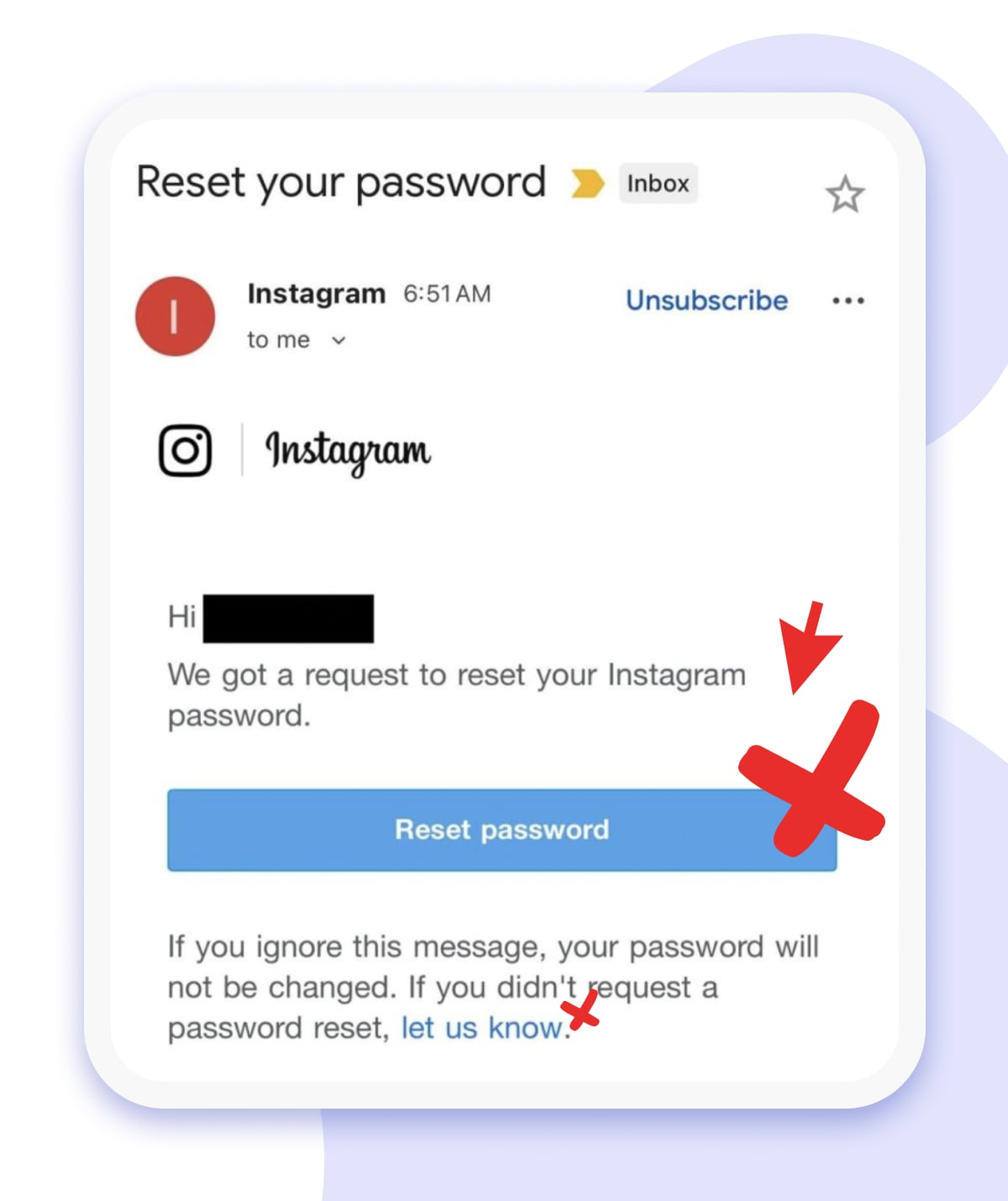

It’s come to light that user data from a whopping 17.5 million Instagram users has somehow ended up on the dark web, which is basically a treasure trove for cybercriminals looking to launch phishing scams. Your account could be next, so take a few minutes to check out our guide to protect your profile.

Now that the side note is out of the way, let's get back to where we left off.

The Moderation Timeline: How We Got Here

Until 2016, Facebook's approach to content moderation was user-driven. In other words, the platform acted on specific reports from users rather than filtering uploads.

A major shift came when the platform was accused of influencing the 2016 US presidential election by taking a hands-off approach to unverified information. In response, third-party organizations, which we know as fact-checkers, stepped in to address fake news.

As AI technology matured, Meta introduced neural networks to proactively detect unwanted content. Keyword alert systems checked every post against the Community Standards. These tools didn't just detect objectionable content; they also prevented it from being published, either by prompting users to make changes or by removing suspicious media outright.

Why Proactive Scanning Became Users' Nightmare

Automatically checking billions of posts a day was probably the most efficient option, but it frustrated users with a constant stream of errors and perceived injustices.

The thing is, while algorithms were busy chasing 'stop words', they lost sight of the bigger picture, which led to over-flagging. Image recognition systems had the same problem, labelling totally harmless content like a family photo with a toy gun as 'violent', or a sunset beach scene as 'nudity'. Then there was mass reporting by coordinated campaigns trying to bury competitors: the targeted posts were auto-blocked even when they broke no rules, which hit small creators hard.

Critics were pretty scathing about selective enforcement. According to internal leaks, content that disagreed with Meta's stance on hot topics like elections, climate change, or vaccines was being shadowbanned, with its reach reduced by up to 90%. Critics also claimed disproportionate targeting, citing a 300% spike in FTC complaints from 2022 to 2025.

All these errors and bans were crippling: users were locked out of their accounts, and influencers and businesses that relied on their visibility to survive saw their livelihoods stifled. People felt their freedom of expression was being shut down, which led to lawsuits, congressional hearings, and users simply leaving for platforms like X.

The pressure, combined with the fact that advertisers were starting to pull out over "uncomfortable branding", forced Meta to reassess.

Meta’s Content Moderation is Handed Back to IG Users (Again!)

In early 2025, Meta announced that it was scaling back its reliance on automated systems for finding and blocking content. Instead, it is trying a more targeted approach: filters still catch the really serious stuff, like calls to violence, terrorism, and illegal content such as drug trafficking. But sensitive topics that Meta classifies as "less serious violations", like hate speech, now rely on user reports to flag potential issues.

All of Meta's platforms (Facebook, Instagram, and Threads) have rolled out the Community Notes feature. Any user can add a note to a post or comment they believe is misleading, inaccurate, or ethically questionable. If enough contributors with a range of perspectives rate a note as helpful, it is displayed right alongside the original post, so everyone can see it, fostering open discussion and collective fact-checking.

User-submitted complaints now take center stage. As a result, dubious keywords or phrases no longer trigger the automatic removals that previously caused over-moderation. Plus, human moderators can spend more time on careful reviews, which makes for fairer decisions.

Meta is putting a lot of emphasis on transparency in an effort to build trust with users. Creators whose content is restricted now get detailed explanations of the reasons behind it, and the appeals process has been streamlined so that things get sorted out quicker. On top of all that, independent experts review the most disputed cases to cut down on mistakes.

So, by blending human judgment with targeted automation, Meta aims to strike a better balance between safety and free expression on its social platforms.

Unpacking the Costs of Meta’s Moderation Pivot

Meta's third-quarter report claims that the company is making fewer mistakes when it comes to taking down content on its platforms:

Of the hundreds of billions of pieces of content produced on Facebook and Instagram in Q3 globally, less than 1% was removed for violating our policies and less than 0.1% was removed incorrectly. For the content that was removed, we measured our enforcement precision – that is, the percentage of correct removals out of all removals – to be more than 90% on Facebook and more than 87% on Instagram. That means about 1 out of every 10 pieces of content removed, and less than one out of every 1,000 pieces of content produced overall, was removed in error.
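To see how those figures fit together, here's a quick back-of-the-envelope check. It's a minimal sketch that plugs in the upper-bound Facebook numbers from the quote (a removal rate of 1% and a precision of 90%); the function name is our own invention, not anything from Meta's tooling.

```python
def wrongful_removal_share(removal_rate: float, precision: float) -> float:
    """Share of ALL produced content that was removed in error.

    precision = correct removals / all removals, so (1 - precision) is the
    share of removals that were mistakes. Multiplying by the removal rate
    turns that into a share of everything that was posted.
    """
    return removal_rate * (1.0 - precision)


# Upper-bound Facebook inputs from Meta's Q3 quote: removal < 1%, precision > 90%.
removal_rate = 0.01
precision = 0.90

errors_per_removal = 1.0 - precision  # share of removals that were mistakes
errors_overall = wrongful_removal_share(removal_rate, precision)  # share of all posts

print(f"Mistakes among removals: about 1 in {round(1 / errors_per_removal)}")
print(f"Mistakes among all content: about 1 in {round(1 / errors_overall)}")
```

Because both inputs are bounds ("less than 1%", "more than 90%"), the real error share comes out at no more than about 0.1%, in line with Meta's statement. It also shows how strongly the headline number depends on how much gets removed in the first place: lower the removal rate and the error share drops with it, even if precision stays flat.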

But there's a catch. Meta has narrowed down what it considers removable content, so it's no real surprise that the number of mistakes shrank as a result. On those grounds, the improvement can't be attributed entirely to smarter moderation systems.

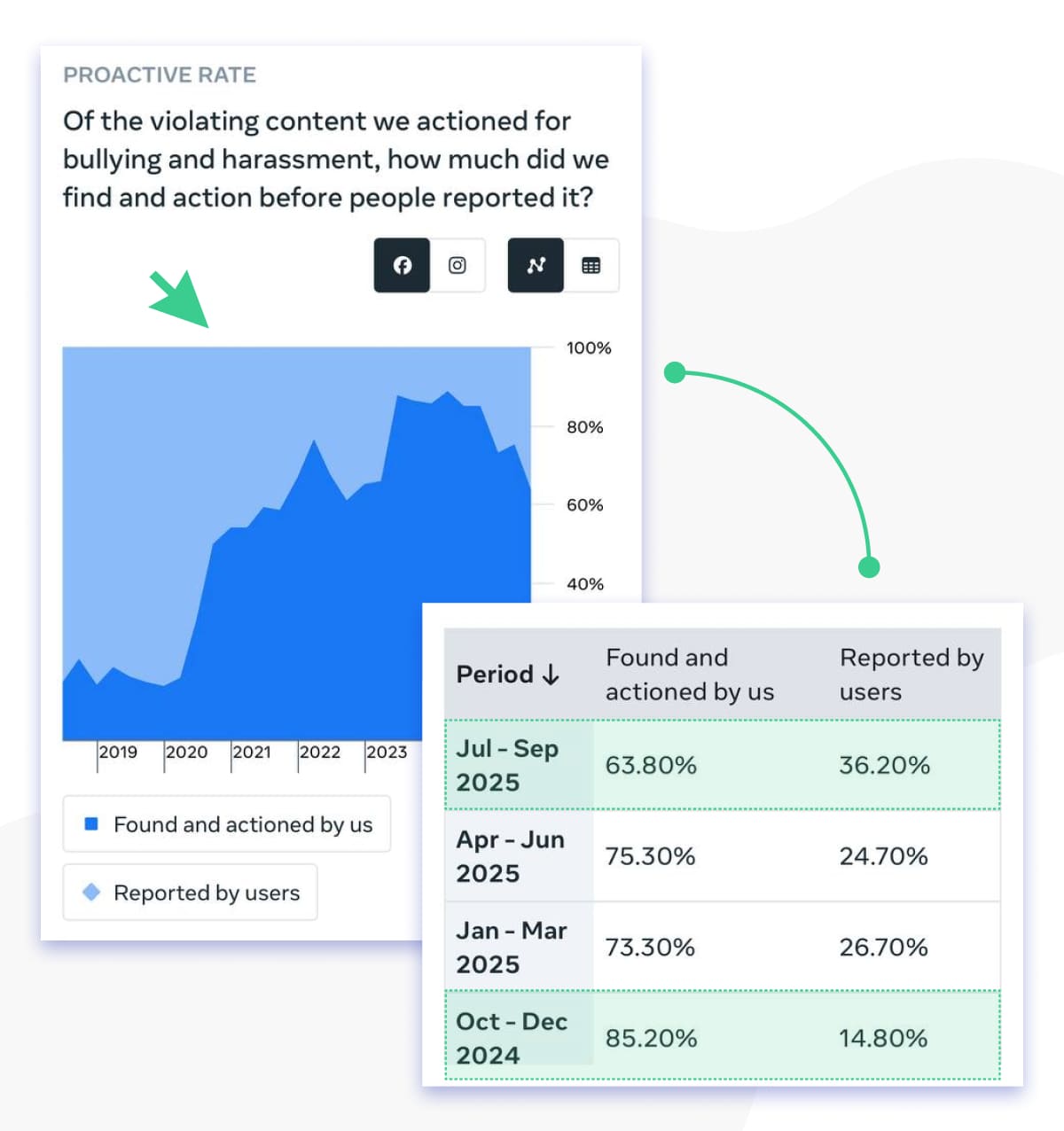

Let's dive into the "Bullying and Harassment" category in the transparency reports. What we find there is that the share of violations detected by Meta's own systems went down while the number of user reports actually went up. With Meta tracking violations less proactively, user-submitted reports tripled. Such a gap reveals a potential vulnerability.

What that means is that Meta's automated systems are falling short about 20% of the time, so a larger share of violating content actually reaches users before someone flags it. We see the same pattern in the "Hateful Conduct" chart.

Such a steady decline over several months is a pretty strong indication of a significant retreat from proactive enforcement. It clearly supports Zuckerberg's vision of putting more emphasis on free expression. Yet this decision could be a risky step in an environment where unchecked uploads contribute to real harm, from online mobs to polarized echo chambers. Along with the big cut in wrongful sanctions, more users across Meta's platforms now encounter potentially damaging content that automated checkers could have nipped in the bud.
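Meta's transparency reports track this through a metric called the proactive rate: the share of actioned content that Meta found before users reported it. Assuming the roughly 20% shortfall mentioned above refers to this metric, it's simply the complement of that rate. Here's a minimal sketch with hypothetical counts (not Meta's actual figures) showing how a falling proactive rate and rising user reports are two sides of the same number.

```python
def proactive_rate(found_by_meta: int, reported_by_users: int) -> float:
    """Share of actioned content that automated systems caught before any user report."""
    total_actioned = found_by_meta + reported_by_users
    if total_actioned == 0:
        raise ValueError("no actioned content")
    return found_by_meta / total_actioned


# Hypothetical quarters (illustrative numbers, NOT Meta's data):
# automated detections fall while user reports triple from 100 to 300.
before = proactive_rate(found_by_meta=1800, reported_by_users=100)
after = proactive_rate(found_by_meta=1200, reported_by_users=300)

print(f"Proactive rate: {before:.0%} -> {after:.0%}")
print(f"Left for users to flag: {1 - before:.0%} -> {1 - after:.0%}")
```

In this made-up example, the share of violations left for users to catch climbs to about one in five, which is the kind of gap the charts point to.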

The company sees this as a net positive, since there are fewer restrictions in the digital space. However, while Meta claims around 90% enforcement precision, it's really hard to say how effective Community Notes is compared with AI scanning in practice, especially if all we're looking at is how many complaints Meta gets, which may not be the whole story.

Drilling into specific areas adds nuance. Meta reports increased prevalence in several categories: adult nudity and sexual activity rose on both Facebook and Instagram, violent and graphic content climbed across platforms, and bullying and harassment ticked up. The company explains that "this is largely due to changes made during the quarter to improve reviewer training and enhance review workflows, which impacts how samples are labeled when measuring prevalence."

So it's hard to say whether this is a genuine increase in harmful content or an artifact of refined metrics. Meta attributes it largely to internal adjustments, suggesting the numbers may reflect improved accuracy rather than failure. Still, critics argue that this framing hides the looser rules and downplays the risks to users.
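Meta estimates prevalence by sampling content views and having reviewers label whether each sampled view contained violating content. Here's a minimal sketch, using a made-up sample size and counts, of why a change in reviewer training alone can move the metric even when the underlying content stays exactly the same.

```python
def estimated_prevalence(labels: list[bool]) -> float:
    """Share of sampled content views that reviewers labeled as violating."""
    if not labels:
        raise ValueError("empty sample")
    return sum(labels) / len(labels)


SAMPLE_SIZE = 10_000  # hypothetical number of sampled views

# The same 10,000 views, labeled under two review workflows (made-up counts).
# Old workflow: reviewers mark 30 views as violating.
old_labels = [True] * 30 + [False] * (SAMPLE_SIZE - 30)
# New workflow: updated training leads reviewers to also mark 15 borderline views.
new_labels = [True] * 45 + [False] * (SAMPLE_SIZE - 45)

print(f"Old estimate: {estimated_prevalence(old_labels):.2%}")
print(f"New estimate: {estimated_prevalence(new_labels):.2%}")
```

Nothing about the posts changed between the two runs, yet measured prevalence rose by half. That's why the reported numbers alone can't tell us whether harmful content actually grew.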

Meta's hybrid model promises balance, but early data hints at growing pains. It's still not clear whether user-driven moderation can scale without sacrificing safety.

Conclusion

Overall, there are no major standout findings in Meta's latest transparency reports: its efforts to evolve moderation have led to fewer mistakes, but potentially to more exposure to harm as a result. By Meta's own metrics, though, it's doing better on this front as well.